NASA astronauts Kate Rubins and Andre Douglas walk through the lunar-like landscape during a simulated moonwalk in the San Francisco Volcanic Field in Northern Arizona on May 17, 2024. Credit: NASA/Josh Valcarcel

NASA astronaut Andre Douglas holds open a sample bag for NASA astronaut Kate Rubins as she pours geologic samples into it during a simulated moonwalk in the San Francisco Volcanic Field in Northern Arizona on May 17, 2024. Credit: NASA/Josh Valcarcel

NASA astronaut Andre Douglas uses a rake to pour “lunar” samples into a sample bag during a simulated moonwalk in the San Francisco Volcanic Field in Northern Arizona on May 17, 2024. Credit: NASA/Josh Valcarcel

NASA astronaut Andre Douglas leads the way while NASA astronaut Kate Rubins follows behind with a lunar tool cart during a simulated moonwalk in the San Francisco Volcanic Field in Northern Arizona on May 17, 2024. Credit: NASA/Josh Valcarcel

NASA astronauts Kate Rubins and Andre Douglas look ahead at their traverse during a simulated moonwalk in the San Francisco Volcanic Field in Northern Arizona on May 17, 2024. Credit: NASA/Josh Valcarcel

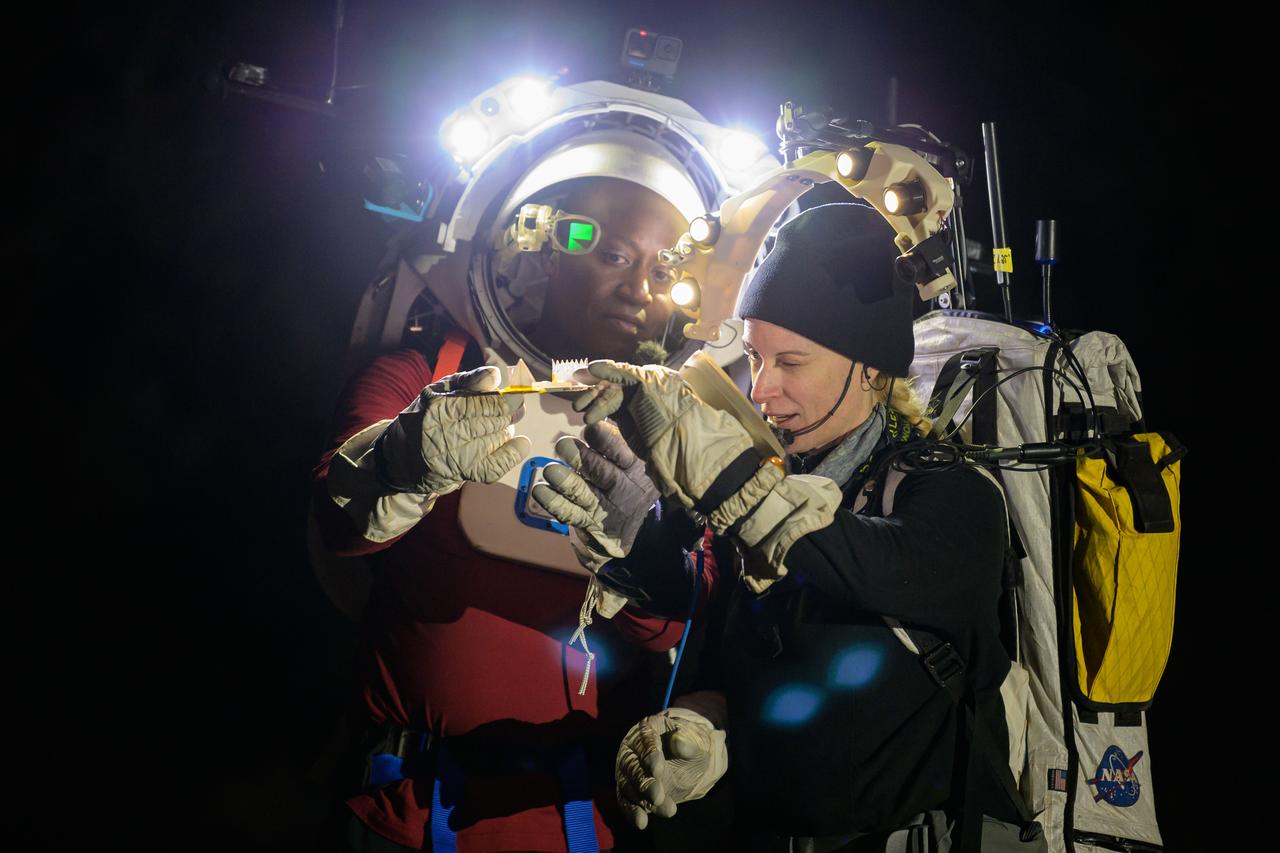

NASA astronauts Andre Douglas and Kate Rubins during a nighttime advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 21, 2024. Douglas is wearing the Joint AR (Joint Augmented Reality Visual Informatics System) display. The suit display features include navigation, photo capture, graphical format of consumables, procedure viewing, mission control updates, and other augmented reality cues and graphics. The team successfully tested navigation displays using data from four different data streams: GPS (Global Positioning System)/IMU (Inertial Measurement Unit), camera/IMU, LiDAR (Light Detection and Ranging), and static maps. Technology like this may be used for future Artemis missions to augment mission control communication and help guide crew back to the lunar lander. Credit: NASA/Josh Valcarcel

NASA astronaut Kate Rubins uses a chisel to collect a small geologic sample during a simulated moonwalk in the San Francisco Volcanic Field in Northern Arizona on May 17, 2024. Credit: NASA/Josh Valcarcel

NASA astronaut Andre Douglas uses a scoop to dig into the ground to collect geologic samples during a simulated moonwalk in the San Francisco Volcanic Field in Northern Arizona on May 17, 2024. Credit: NASA/Josh Valcarcel

NASA astronaut Andre Douglas takes a picture of the surrounding lunar-like landscape during a simulated moonwalk in the San Francisco Volcanic Field in Northern Arizona on May 17, 2024. Credit: NASA/Josh Valcarcel

NASA astronaut Andre Douglas pours a scoopful of geologic samples into a sample bag during a simulated moonwalk in the San Francisco Volcanic Field in Northern Arizona on May 17, 2024. Credit: NASA/Josh Valcarcel

NASA astronaut Kate Rubins uses the hand controller on her wrist to display information while wearing the Joint AR (Joint Augmented Reality Visual Informatics System) display during an advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 21, 2024. The suit display features include navigation, photo capture, graphical format of consumables, procedure viewing, mission control updates, and other augmented reality cues and graphics. The team successfully tested navigation displays using data from four different data streams: GPS (Global Positioning System)/IMU (Inertial Measurement Unit), camera/IMU, LiDAR (Light Detection and Ranging), and static maps. Technology like this may be used for future Artemis missions to augment mission control communication and help guide crew back to the lunar lander. Credit: NASA/Josh Valcarcel

An engineer helps NASA astronaut Kate Rubins adjust the lens on the Joint AR (Joint Augmented Reality Visual Informatics System) display she’s wearing during an advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 19, 2024. The suit display features include navigation, photo capture, graphical format of consumables, procedure viewing, mission control updates, and other augmented reality cues and graphics. The team successfully tested navigation displays using data from four different data streams: GPS (Global Positioning System)/IMU (Inertial Measurement Unit), camera/IMU, LiDAR (Light Detection and Ranging), and static maps. Technology like this may be used for future Artemis missions to augment mission control communication and help guide crew back to the lunar lander. Credit: NASA/Josh Valcarcel

NASA astronaut Kate Rubins walks through the lunar-like landscape wearing the Joint AR (Joint Augmented Reality Visual Informatics System) display during an advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 19, 2024. The suit display features include navigation, photo capture, graphical format of consumables, procedure viewing, mission control updates, and other augmented reality cues and graphics. The team successfully tested navigation displays using data from four different data streams: GPS (Global Positioning System)/IMU (Inertial Measurement Unit), camera/IMU, LiDAR (Light Detection and Ranging), and static maps. Technology like this may be used for future Artemis missions to augment mission control communication and help guide crew back to the lunar lander. Credit: NASA/Josh Valcarcel

NASA astronaut Andre Douglas views the lunar-like landscape at dusk while wearing the Joint AR (Joint Augmented Reality Visual Informatics System) display during an advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 21, 2024. The suit display features include navigation, photo capture, graphical format of consumables, procedure viewing, mission control updates, and other augmented reality cues and graphics. The team successfully tested navigation displays using data from four different data streams: GPS (Global Positioning System)/IMU (Inertial Measurement Unit), camera/IMU, LiDAR (Light Detection and Ranging), and static maps. Technology like this may be used for future Artemis missions to augment mission control communication and help guide crew back to the lunar lander. Credit: NASA/Josh Valcarcel

NASA astronaut Kate Rubins uses the hand controller on her wrist to display information while wearing the Joint AR (Joint Augmented Reality Visual Informatics System) display during an advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 19, 2024. The suit display features include navigation, photo capture, graphical format of consumables, procedure viewing, mission control updates, and other augmented reality cues and graphics. The team successfully tested navigation displays using data from four different data streams: GPS (Global Positioning System)/IMU (Inertial Measurement Unit), camera/IMU, LiDAR (Light Detection and Ranging), and static maps. Technology like this may be used for future Artemis missions to augment mission control communication and help guide crew back to the lunar lander. Credit: NASA/Josh Valcarcel

NASA astronaut Andre Douglas wears AR (Augmented Reality) display technology during an advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 21, 2024. The monocular lens consists of a pico-projector and a waveguide optical element that focus an image so crew can see the real world overlaid with digital information. These near-eye form factors may improve usability while minimally affecting the complex biomechanics of working in a pressurized suit environment. Credit: NASA/Josh Valcarcel

NASA astronaut Andre Douglas wears AR (Augmented Reality) display technology during a nighttime advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 21, 2024. The monocular lens consists of a pico-projector and a waveguide optical element that focus an image so crew can see the real world overlaid with digital information. These near-eye form factors may improve usability while minimally affecting the complex biomechanics of working in a pressurized suit environment. Credit: NASA/Josh Valcarcel

Engineers help NASA astronaut Andre Douglas adjust the Joint AR (Joint Augmented Reality Visual Informatics System) display he’s wearing during a nighttime advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 21, 2024. The suit display features include navigation, photo capture, graphical format of consumables, procedure viewing, mission control updates, and other augmented reality cues and graphics. The team successfully tested navigation displays using data from four different data streams: GPS (Global Positioning System)/IMU (Inertial Measurement Unit), camera/IMU, LiDAR (Light Detection and Ranging), and static maps. Technology like this may be used for future Artemis missions to augment mission control communication and help guide crew back to the lunar lander. Credit: NASA/Josh Valcarcel

NASA astronaut Kate Rubins opens the sun visor on the Joint AR (Joint Augmented Reality Visual Informatics System) display she’s wearing during an advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 19, 2024. The suit display features include navigation, photo capture, graphical format of consumables, procedure viewing, mission control updates, and other augmented reality cues and graphics. The team successfully tested navigation displays using data from four different data streams: GPS (Global Positioning System)/IMU (Inertial Measurement Unit), camera/IMU, LiDAR (Light Detection and Ranging), and static maps. Technology like this may be used for future Artemis missions to augment mission control communication and help guide crew back to the lunar lander. Credit: NASA/Josh Valcarcel

NASA astronaut Andre Douglas wears the Joint AR (Joint Augmented Reality Visual Informatics System) display during a nighttime advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 21, 2024. The suit display features include navigation, photo capture, graphical format of consumables, procedure viewing, mission control updates, and other augmented reality cues and graphics. The team successfully tested navigation displays using data from four different data streams: GPS (Global Positioning System)/IMU (Inertial Measurement Unit), camera/IMU, LiDAR (Light Detection and Ranging), and static maps. Technology like this may be used for future Artemis missions to augment mission control communication and help guide crew back to the lunar lander. Credit: NASA/Josh Valcarcel

NASA astronaut Kate Rubins pushes a cart through the lunar-like landscape while wearing the Joint AR (Joint Augmented Reality Visual Informatics System) display during an advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 19, 2024. The suit display features include navigation, photo capture, graphical format of consumables, procedure viewing, mission control updates, and other augmented reality cues and graphics. The team successfully tested navigation displays using data from four different data streams: GPS (Global Positioning System)/IMU (Inertial Measurement Unit), camera/IMU, LiDAR (Light Detection and Ranging), and static maps. Technology like this may be used for future Artemis missions to augment mission control communication and help guide crew back to the lunar lander. Credit: NASA/Josh Valcarcel

NASA astronaut Kate Rubins uses tongs to collect geologic samples while wearing the Joint AR (Joint Augmented Reality Visual Informatics System) display during an advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 21, 2024. The suit display features include navigation, photo capture, graphical format of consumables, procedure viewing, mission control updates, and other augmented reality cues and graphics. The team successfully tested navigation displays using data from four different data streams: GPS (Global Positioning System)/IMU (Inertial Measurement Unit), camera/IMU, LiDAR (Light Detection and Ranging), and static maps. Technology like this may be used for future Artemis missions to augment mission control communication and help guide crew back to the lunar lander. Credit: NASA/Josh Valcarcel

NASA astronaut Kate Rubins uses tongs to pick up a geologic sample while wearing the Joint AR (Joint Augmented Reality Visual Informatics System) display during an advanced technology run in the San Francisco Volcanic Field in Northern Arizona on May 21, 2024. The suit display features include navigation, photo capture, graphical format of consumables, procedure viewing, mission control updates, and other augmented reality cues and graphics. The team successfully tested navigation displays using data from four different data streams: GPS (Global Positioning System)/IMU (Inertial Measurement Unit), camera/IMU, LiDAR (Light Detection and Ranging), and static maps. Technology like this may be used for future Artemis missions to augment mission control communication and help guide crew back to the lunar lander. Credit: NASA/Josh Valcarcel

NASA teams work to set up the base camp for the Joint Extravehicular Activity and Human Surface Mobility Test Team Field Test 5 (JETT5) in the San Francisco Volcanic Field in Northern Arizona on May 11, 2024. Credit: NASA/Josh Valcarcel

Engineers Juan Busto (left) and Mike Miller (right) work to install the communications network for the base camp during the Joint Extravehicular Activity and Human Surface Mobility Test Team Field Test 5 (JETT5) in the San Francisco Volcanic Field in Northern Arizona on May 11, 2024. Credit: NASA/Josh Valcarcel

NASA teams work to set up one of many tents that will make up the base camp for the Joint Extravehicular Activity and Human Surface Mobility Test Team Field Test 5 (JETT5) in the San Francisco Volcanic Field in Northern Arizona on May 11, 2024. Credit: NASA/Josh Valcarcel

Test deputy field manager Angela Garcia ties down one of the tents that will make up the base camp for the Joint Extravehicular Activity and Human Surface Mobility Test Team Field Test 5 (JETT5) in the San Francisco Volcanic Field in Northern Arizona on May 11, 2024. Credit: NASA/Josh Valcarcel

NASA teams work to set up one of many tents that will make up the base camp for the Joint Extravehicular Activity and Human Surface Mobility Test Team Field Test 5 (JETT5) in the San Francisco Volcanic Field in Northern Arizona on May 11, 2024. Credit: NASA/Josh Valcarcel

Spacesuit engineers Sheldon Stockfleth and Christine Jerome work to set up the base camp for the Joint Extravehicular Activity and Human Surface Mobility Test Team Field Test 5 (JETT5) in the San Francisco Volcanic Field in Northern Arizona on May 11, 2024. Credit: NASA/Josh Valcarcel

NASA astronaut Kate Rubins takes a picture of a geologic sample during a simulated moonwalk in the San Francisco Volcanic Field in Northern Arizona on May 17, 2024. Credit: NASA/Josh Valcarcel

NASA astronaut Andre Douglas takes a closer look at the geologic samples he collected during a simulated moonwalk in the San Francisco Volcanic Field in Northern Arizona on May 17, 2024. Credit: NASA/Josh Valcarcel